Apple has introduced a new feature to its camera system that automatically recognizes and transcribes text in your photos, from a phone number on a business card to a whiteboard full of notes. Live Text, as the feature is called, doesn’t need any prompting or special work from the user — just tap the icon and you’re good to go.

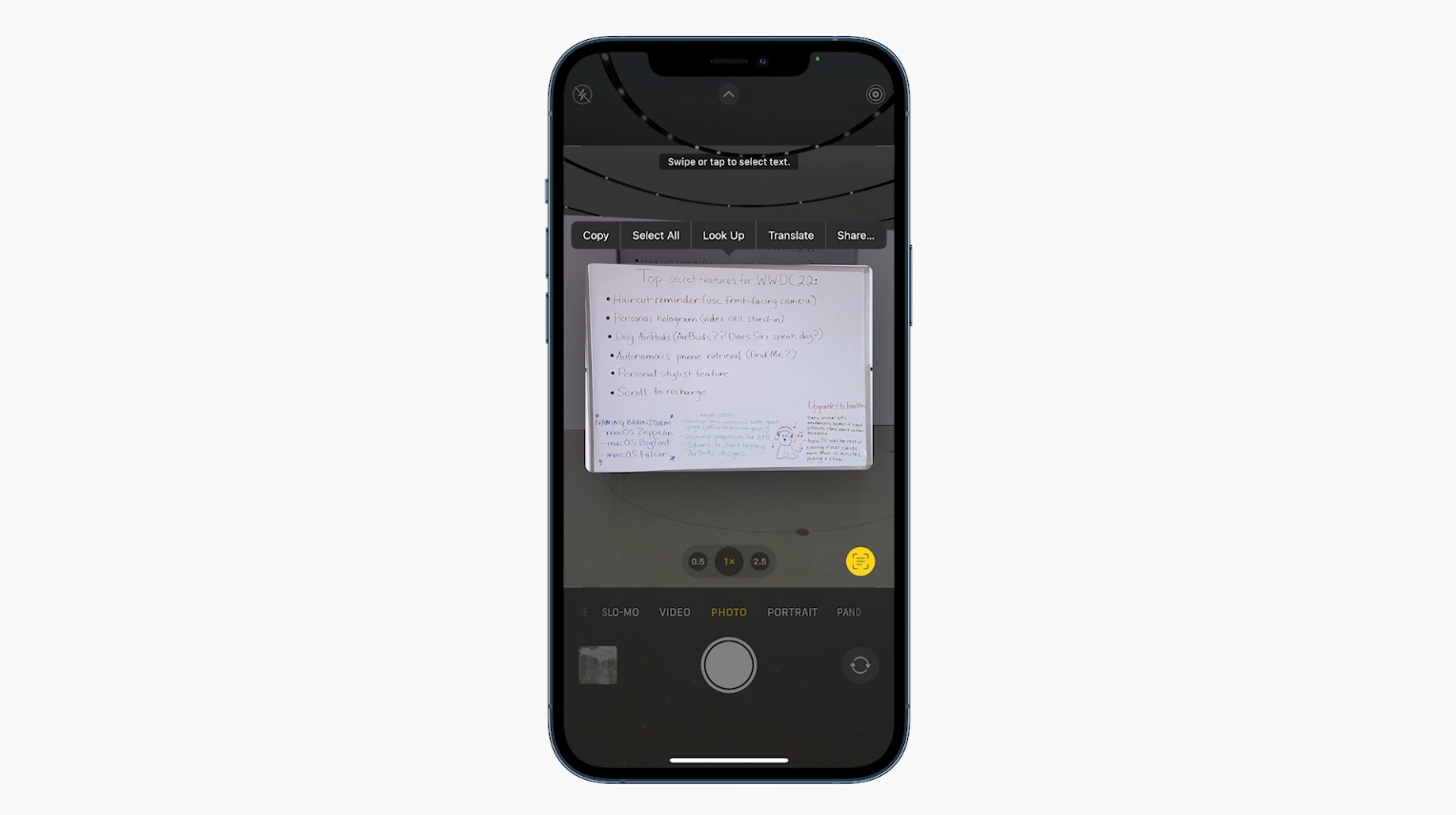

Announced by Craig Federighi on the virtual stage of WWDC, Live Text will be arriving on iPhones with iOS 15. He demonstrated it with a few pictures: one of a whiteboard after a meeting, and a couple of snapshots that included restaurant signs in the background.

Tapping the Live Text button in the lower right gave detected text a slight underline, and then a swipe allowed it to be selected and copied. In the case of the whiteboard, it collected several sentences of notes including bullet points, and with one of the restaurant signs it grabbed the phone number, which could be called or saved.

The feature is reminiscent of many found in Google’s long-developed Lens app, and the Pixel 4 added more robust scanning capability in 2019. The difference is that the text is captured more or less passively in every photo taken by an iPhone running the new system — you don’t have to enter scanner mode or launch a separate app.

This is a nice thing for anyone to have, but it could be especially helpful for people with visual impairments. A snapshot or two turns text that would otherwise be difficult to read into something that can be dictated or saved.

The process seems to take place entirely on the phone, so don’t worry that this info is being sent to a datacenter somewhere. That also means it’s fairly quick, though until we test it for ourselves we can’t say whether it’s instantaneous or, like some other machine learning features, something that happens over the next few seconds or minutes after you take a shot.

from TechCrunch https://ift.tt/2RzHyTQ
